Streamlining Your Machine Learning Journey: The Ultimate Technologies Landscape for Efficiency and Scale

Publication Date

May 21st, 2024

Category

Blog

Author Name

Data Science Wizards

The fields of data science and artificial intelligence (AI) are evolving rapidly, driven by a broad and changing set of technologies. From foundational algorithms to cutting-edge machine learning models, these tools are transforming how we analyze data and build intelligent systems. Let's look at a few prominent tools and technologies in this landscape.

Landscape of Technologies

1. Data Pipeline

A data pipeline in a data science project is a set of processes and tools that collect raw data from multiple sources, clean and transform it, and move it to a destination, often a machine learning model or a database. By automating these tasks, data pipelines streamline the data preparation phase, letting data scientists focus on analysis and model building. They also spare companies the tedious and error-prone work of manual data collection and give them constant access to the clean, reliable, and up-to-date data that data-driven decisions depend on.
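The collect, clean, and move stages described above can be sketched in a few lines of Python. This is a minimal illustration, not a production design: the CSV content, the `payments` table, and the function names are all made up for the example, and an in-memory SQLite database stands in for the real destination.

```python
import csv
import io
import sqlite3

# Hypothetical raw export; in practice this would come from files, APIs, or queues.
RAW_CSV = """customer_id,amount,country
1, 19.99 ,us
2,,de
3,5.00,US
"""

def extract(text):
    """Collect raw rows from a CSV source."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Drop rows with a missing amount and normalize types and casing."""
    cleaned = []
    for row in rows:
        amount = row["amount"].strip()
        if not amount:
            continue  # skip incomplete records
        cleaned.append(
            (int(row["customer_id"]), float(amount), row["country"].strip().upper())
        )
    return cleaned

def load(rows, conn):
    """Write cleaned rows to the destination table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS payments "
        "(customer_id INTEGER, amount REAL, country TEXT)"
    )
    conn.executemany("INSERT INTO payments VALUES (?, ?, ?)", rows)
    conn.commit()

def run_pipeline(text, conn):
    load(transform(extract(text)), conn)

conn = sqlite3.connect(":memory:")  # in-memory database as a stand-in destination
run_pipeline(RAW_CSV, conn)
print(conn.execute("SELECT * FROM payments ORDER BY customer_id").fetchall())
# [(1, 19.99, 'US'), (3, 5.0, 'US')]
```

Keeping each stage as its own function makes it easy to test the cleaning rules separately from the source and destination, which is the same separation that dedicated pipeline tools provide at larger scale.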

- Apache NiFi

Apache NiFi is an open-source data integration tool designed to automate the flow of data between systems. It provides a web-based interface for designing data flows, in which users drag and drop processors to build complex data pipelines. NiFi is well suited to real-time data ingestion, transformation, and routing across diverse data formats and sources. Its key features include guaranteed delivery, data buffering with backpressure, prioritized queuing, and data provenance. In a data engineering pipeline, NiFi streamlines data movement, helps preserve data integrity, and connects varied sources and destinations, which supports real-time analytics and operational intelligence. It is particularly useful for large data volumes, where scalability and reliability matter.
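To make the idea of backpressure concrete, here is a small Python sketch of the general technique. It is not NiFi code and does not use any NiFi API: a bounded queue sits between a fast producer and a slower consumer, and the producer is forced to wait whenever the buffer is full. The queue size and item counts are arbitrary values chosen for the example.

```python
import queue
import threading
import time

# Bounded buffer between two processing steps. When it is full, the producer
# blocks instead of overwhelming the slower consumer: that is the backpressure.
buffer = queue.Queue(maxsize=5)
processed = []

def producer(n_items):
    for i in range(n_items):
        buffer.put(i)      # blocks while the queue already holds 5 items
    buffer.put(None)       # sentinel: no more data

def consumer():
    while True:
        item = buffer.get()
        if item is None:
            break
        time.sleep(0.001)  # simulate a slow downstream step
        processed.append(item * 2)

threads = [
    threading.Thread(target=producer, args=(50,)),
    threading.Thread(target=consumer),
]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(len(processed))  # 50
```

No items are dropped and memory use stays bounded, because the upstream step slows down to match the downstream one.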

- Estuary Flow

Estuary Flow is a data integration platform for building real-time data pipelines. It captures data from source systems, including change data capture from databases, optionally transforms it in flight, and materializes the results into destinations such as databases and data warehouses, keeping them continuously up to date. It enables the creation of scalable, fault-tolerant pipelines for ingesting, processing, and transforming data across many sources and destinations. In a data engineering pipeline, Estuary Flow serves as the data movement layer, integrating disparate data sources so that organizations can work from current data when deriving insights and making decisions.

- PostgreSQL

PostgreSQL, often referred to as Postgres, is an open-source relational database management system known for its robustness, extensibility, and adherence to SQL standards. In a data engineering pipeline, Postgres plays a fundamental role as a storage and processing engine. It efficiently stores structured data, offering powerful features for data manipulation, indexing, and querying. Data engineers leverage Postgres to persist and manage various types of data, from transactional records to analytical datasets. Its reliability and scalability make it a popular choice for data warehousing, operational databases, and serving as a backend for diverse applications within data ecosystems.

- Feathr

Feathr is an open-source feature store that provides a scalable solution for feature engineering and management in the data engineering pipeline. It offers a user-friendly interface for defining, computing, and serving features for machine learning models. Feathr abstracts away much of the complexity of feature engineering, allowing data scientists to focus on building models rather than managing data pipelines. By centralizing feature definitions and computation, it reduces engineering time and helps keep the features used in training and in serving consistent. It supports a wide range of data sources and can handle both batch and streaming feature computation.
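The core idea of a feature store, defining a feature once and reusing the same definition wherever it is needed, can be illustrated with a toy in-memory registry. The sketch below is not Feathr's API; `Feature`, `FeatureRegistry`, and the two example features are hypothetical names invented for this illustration.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

@dataclass(frozen=True)
class Feature:
    name: str
    compute: Callable[[dict], float]  # maps one raw record to a feature value

class FeatureRegistry:
    """Hypothetical in-memory registry: define once, reuse for training and serving."""

    def __init__(self) -> None:
        self._features: Dict[str, Feature] = {}

    def register(self, feature: Feature) -> None:
        self._features[feature.name] = feature

    def get_vector(self, record: dict, names: List[str]) -> List[float]:
        # Look up each requested feature by name and compute it for this record.
        return [self._features[n].compute(record) for n in names]

registry = FeatureRegistry()
registry.register(Feature("order_total", lambda r: r["price"] * r["quantity"]))
registry.register(Feature("is_weekend", lambda r: 1.0 if r["weekday"] >= 5 else 0.0))

record = {"price": 4.5, "quantity": 2, "weekday": 6}
print(registry.get_vector(record, ["order_total", "is_weekend"]))  # [9.0, 1.0]
```

Because training and inference code both call `get_vector` with feature names, neither needs its own copy of the feature logic, which is the main source of training/serving mismatches that feature stores aim to remove.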

2. Modelling and Deployment

Machine learning modeling involves training and validating models to solve specific problems. Deployment involves integrating these models into production environments where they can take in inputs and return outputs, making predictions available to others.

- JupyterLab

JupyterLab is an interactive development environment that facilitates data exploration, visualization, and prototyping of machine learning (ML) models. It offers a web-based interface for creating and sharing notebooks containing live code, equations, visualizations, and narrative text. In ML modeling, JupyterLab serves as a versatile workspace where data scientists can iteratively build, train, and evaluate models. Its interactive notebooks give immediate feedback, so practitioners can explore data, fine-tune algorithms, and communicate insights effectively, which shortens the path from prototype to deployed ML solution.

- Ceph

Ceph is an open-source, distributed storage platform that provides object, block, and file storage services. It employs a resilient architecture that ensures data redundancy and fault tolerance through replication and erasure coding. It is designed to be highly scalable, reliable, and self-managing, making it suitable for large-scale storage deployments. Ceph’s object storage system, known as RADOS (Reliable Autonomic Distributed Object Store), stores data as objects in a flat namespace. It uses the CRUSH algorithm to distribute data across the cluster, ensuring high performance and fault tolerance. The Ceph Object Storage Gateway (RGW) exposes the object storage layer through S3 and Swift-compatible APIs, allowing seamless integration with various applications and services. It ensures data availability and durability, supports seamless scalability, and integrates well with cloud-native environments.
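The value of computing data placement, rather than looking it up in a central table, can be shown with a short sketch. The code below is not the CRUSH algorithm, which also takes cluster hierarchy and device weights into account. It uses rendezvous (highest-random-weight) hashing as a simpler stand-in for the same idea, and the node names are hypothetical.

```python
import hashlib

def placement(object_name, nodes, replicas=2):
    """Pick `replicas` nodes for an object by ranking nodes on a per-object hash score."""
    def score(node):
        digest = hashlib.sha256(f"{node}:{object_name}".encode()).hexdigest()
        return int(digest, 16)
    return sorted(nodes, key=score, reverse=True)[:replicas]

nodes = ["osd-a", "osd-b", "osd-c", "osd-d"]  # hypothetical storage node names
first = placement("images/cat.png", nodes)
second = placement("images/cat.png", list(reversed(nodes)))

# Any client that knows the node list computes the same answer independently.
print(first == second)  # True
```

With this scheme, removing a node that does not hold a given object leaves that object's placement unchanged, so only a fraction of the data has to move when the cluster changes.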

- Polyaxon

Polyaxon is an open-source platform for managing, orchestrating, and scaling machine learning and deep learning experiments. It offers a user-friendly interface for data scientists, team leads, architects, and executives, with features that cover experiment tracking, orchestration, hyperparameter optimization, insights, model management, artifact lineage, collaboration, and compliance. Polyaxon supports the major deep learning frameworks and libraries for building, training, and monitoring models at scale, and it runs on Kubernetes, which enables scalable and reproducible ML workflows. In the model development pipeline, Polyaxon lets data scientists efficiently run, monitor, and compare numerous experiments, automate workflows, and manage resource allocation. This facilitates collaboration, improves productivity, and supports the reproducibility of machine learning projects.

- Kubeflow

Kubeflow is an open-source platform that simplifies the deployment and management of machine learning (ML) workflows on Kubernetes. In the model deployment process, Kubeflow plays a crucial role. It allows data scientists and ML engineers to package their models as Docker containers and deploy them to Kubernetes clusters. Kubeflow Pipelines, a key component, enables the creation of reusable and scalable ML workflows, facilitating the automation of model training, evaluation, and deployment. This helps organizations achieve consistent, reliable, and reproducible model deployment, while also enabling collaboration and monitoring throughout the ML lifecycle.
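Conceptually, a pipeline of this kind is a set of steps plus the dependencies between them. The sketch below shows that structure using only the Python standard library. It does not use the Kubeflow Pipelines SDK, the step bodies are placeholders, and the 0.9 accuracy threshold is an arbitrary value chosen for the example.

```python
from graphlib import TopologicalSorter

def train(ctx):
    ctx["model"] = {"weights": [0.2, 0.8]}  # placeholder for a real training step

def evaluate(ctx):
    ctx["accuracy"] = 0.91  # placeholder metric; a real step would score held-out data

def deploy(ctx):
    # Only promote the model when it clears a hypothetical quality bar.
    ctx["deployed"] = ctx["accuracy"] >= 0.9

STEPS = {"train": train, "evaluate": evaluate, "deploy": deploy}
# Each step maps to the set of steps it depends on.
DEPENDENCIES = {"train": set(), "evaluate": {"train"}, "deploy": {"evaluate"}}

def run(steps, dependencies):
    """Execute every step after all of its dependencies have run."""
    ctx, order = {}, []
    for name in TopologicalSorter(dependencies).static_order():
        steps[name](ctx)
        order.append(name)
    return ctx, order

ctx, order = run(STEPS, DEPENDENCIES)
print(order)            # ['train', 'evaluate', 'deploy']
print(ctx["deployed"])  # True
```

In a real Kubeflow deployment each step would run in its own container on the cluster, but the declarative shape, steps plus dependencies, is what makes the workflow reusable and repeatable.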

3. ML Monitoring

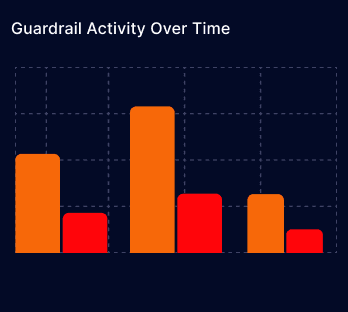

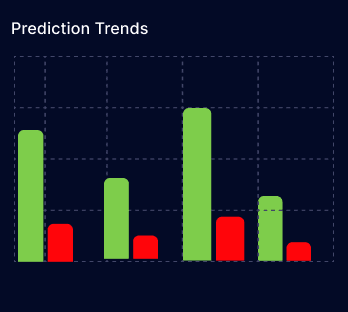

ML monitoring is the practice of continuously tracking the performance of machine learning models in production to identify potential issues, such as data drift, concept drift, and fairness problems, and to take corrective action.
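As an example of what detecting data drift can look like in practice, the sketch below computes the Population Stability Index (PSI), one common drift measure, between a reference sample and a live sample of a single numeric feature. The bin count, the epsilon used for empty bins, and the synthetic data are assumptions made for the illustration. The 0.25 cutoff is a frequently quoted rule of thumb, not a universal standard.

```python
import math

def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a reference sample and a live sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all values are equal

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # Replace empty bins with a small epsilon so the logarithm is defined.
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

reference = [i / 100 for i in range(100)]   # training-time feature values
same = list(reference)                      # live data, unchanged
shifted = [v + 0.5 for v in reference]      # live data, shifted upward

print(psi(reference, same))            # 0.0
print(psi(reference, shifted) > 0.25)  # True
```

A monitoring job could run a check like this per feature on a schedule and raise an alert when the score crosses the chosen threshold.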

- SigNoz

SigNoz is an open-source observability platform designed for monitoring distributed systems, including machine learning models. It collects, analyzes, and visualizes metrics, traces, and logs in real-time to provide insights into system performance and behavior. SigNoz enables monitoring of model inference latency, error rates, and resource utilization, helping detect anomalies and performance degradation. It supports the tracing of requests across microservices, allowing for end-to-end visibility in model serving pipelines. In the model monitoring pipeline, SigNoz plays a crucial role by providing actionable insights into model performance, facilitating troubleshooting, optimization, and ensuring the reliability and effectiveness of machine learning applications.
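The two serving metrics mentioned above, inference latency and error rate, are simple to compute once request data has been collected. The sketch below is plain Python and does not use SigNoz or any instrumentation library. The request records and field names are hypothetical, and treating HTTP status codes of 500 and above as errors is an assumption.

```python
import math

def summarize(requests):
    """Return the p95 latency (ms) and error rate for a batch of inference requests."""
    latencies = sorted(r["latency_ms"] for r in requests)
    # Nearest-rank percentile: smallest value with at least 95% of samples at or below it.
    rank = math.ceil(95 * len(latencies) / 100)
    p95 = latencies[rank - 1]
    errors = sum(1 for r in requests if r["status"] >= 500)
    return {"p95_latency_ms": p95, "error_rate": errors / len(requests)}

# Hypothetical request log: 19 fast successful calls and one slow failure.
requests = [{"latency_ms": 20 + i, "status": 200} for i in range(19)]
requests.append({"latency_ms": 900, "status": 503})

print(summarize(requests))  # {'p95_latency_ms': 38, 'error_rate': 0.05}
```

Percentiles are usually preferred over averages for latency because a single slow request, like the 900 ms one here, would otherwise distort the picture.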

- Zabbix

Zabbix is a comprehensive open-source monitoring solution that plays a crucial role in the Model Monitoring pipeline. It offers a wide range of features for monitoring the health, performance, and availability of models in production. Zabbix collects metrics from various sources, including models, and provides advanced visualization capabilities through customizable dashboards. It supports complex event processing, allowing users to define rules for detecting anomalies and triggering alerts. Zabbix’s scalability and flexibility make it suitable for monitoring models in large-scale environments, ensuring reliable and efficient model deployment and operation.

- Fiddler AI

Fiddler AI is a commercial AI observability platform for the model monitoring pipeline. It provides a comprehensive solution for monitoring, analyzing, and explaining machine learning models in production. Fiddler AI offers features like data drift detection, data integrity checks, outlier identification, and real-time alerting to help teams catch issues early. It supports a wide range of models, including natural language processing and computer vision, and integrates with popular ML frameworks. By enabling organizations to continuously monitor and optimize their models, Fiddler AI helps them remain accurate, fair, and reliable throughout their lifecycle, which builds trust and reduces risk.

Streamlining the entire landscape with DSW UnifyAI – Enterprise GenAI platform

The primary objective of the DSW UnifyAI platform is to establish a centralized machine learning platform that harnesses multiple technologies to streamline and simplify the process of building, experimenting with, deploying, and managing machine learning models in production. By abstracting away the complexities inherent in model deployment, DSW UnifyAI aims to accelerate and smooth the transition from model development to operational deployment. The platform facilitates several key functions, including but not limited to:

1. Centralization: DSW UnifyAI consolidates various aspects of the machine learning lifecycle, including data preparation, model training, evaluation, and deployment, into a single, cohesive platform.

2. Integration of Technologies: It seamlessly integrates multiple technologies and tools required for different stages of the machine learning pipeline, ensuring interoperability and efficiency.

3. Abstraction of Complexities: DSW UnifyAI abstracts away the intricate details and technical challenges involved in model productionisation, allowing data scientists and engineers to focus on model development and business insights rather than deployment complexities.

4. Swift Productionisation: By abstracting heavy complexities, DSW UnifyAI enables rapid and efficient deployment of machine learning models into production environments, reducing time-to-market and accelerating the realization of business value from AI initiatives.

5. Seamless Workflow: It facilitates a smooth workflow from model development to deployment, providing tools and features to streamline collaboration, version control, testing, and monitoring of deployed models.

6. Scalability and Flexibility: DSW UnifyAI is designed to scale with the organization’s needs, supporting the deployment of models across diverse environments, from cloud to on-premises infrastructure, and accommodating various deployment scenarios.

Overall, the DSW UnifyAI platform serves as a comprehensive solution for organizations seeking to leverage machine learning, offering the tools and technologies needed to operationalize models efficiently. The platform provides the following features for building end-to-end AI use cases:

- Data Ingestion toolkit to acquire data from varied source systems and persist it in DSW UnifyAI for building use cases.

- Feature Store for feature engineering and feature configuration, serving features during model development and inference.

- Model Integration and Development for the data scientist/user to experiment, train AI models, and store experiment results in the model registry, then choose a candidate model for registration, with versioning, by comparing metrics.

- AI Studio to facilitate the automatic creation of baseline use cases and boilerplate code in a few clicks.

- GenAI to help generate quick data insights through conversational interaction on user-uploaded data.

- Model Repository to enable data scientists/users to manage and evaluate model experiments.

- One-click model deployment through the UnifyAI user interface.

- A user-friendly interface to evaluate and monitor model performance.

Want to build your AI-enabled use case seamlessly and faster with DSW UnifyAI?

Authored by Hardik Raja, Senior Data Scientist at Data Science Wizards (DSW), this article surveys technologies used in data science and machine learning projects, from data engineering through model deployment to model monitoring. It also shows how the machine learning project lifecycle can be streamlined with the DSW UnifyAI platform, which enterprises can use to accelerate the development and deployment of machine learning solutions, improving efficiency, robustness, and competitiveness.

About Data Science Wizards (DSW)

Data Science Wizards (DSW) is a pioneering AI innovation company that is revolutionizing industries with its cutting-edge UnifyAI platform. Our mission is to empower enterprises by enabling them to build their AI-powered value chain use cases and seamlessly transition from experimentation to production with trust and scale.

To learn more about DSW and our ground-breaking UnifyAI platform, visit our website at www.datasciencewizards.ai/. Join us in shaping the future of AI and transforming industries through innovation, reliability, and scalability.
